Narrative Writing Prompts Grade 4

Jan 29, 2025 · DeepSeek is one of the latest models gaining attention as a potential alternative to GPT-4o. But how practical is it? We looked at its performance, costs, and real-world usability.

Feb 3, 2025 · Industry experts speculate that the attacks could be linked to geopolitical tensions surrounding DeepSeek's rapid rise and regulatory scrutiny. Meanwhile, the U.S. Commerce ...

Here's why DeepSeek is worth your attention: 1. Unmatched Language Recognition. DeepSeek has proven to be far better at recognizing and responding to languages like Slovenian, a task ...

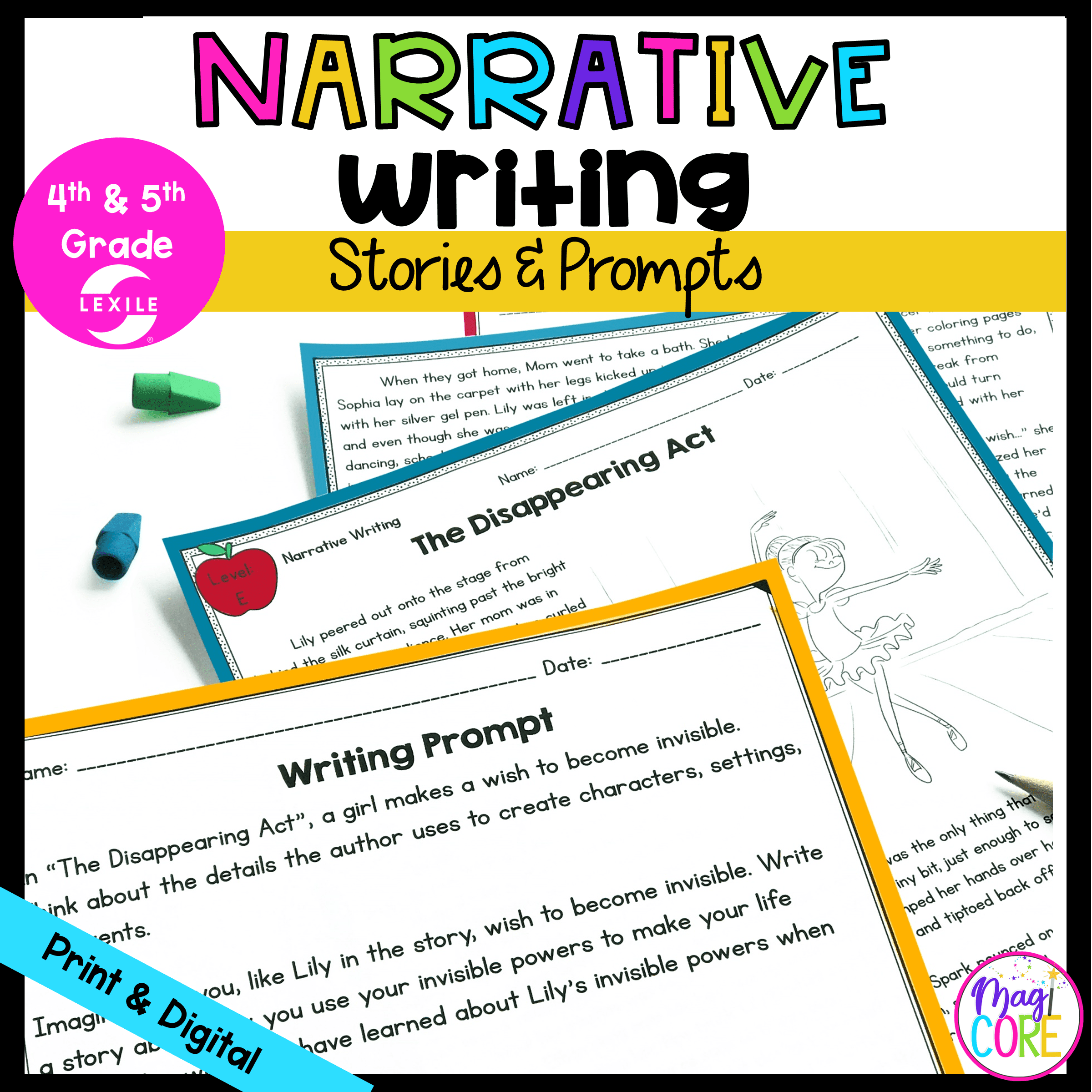

Narrative Writing Prompts Grade 4

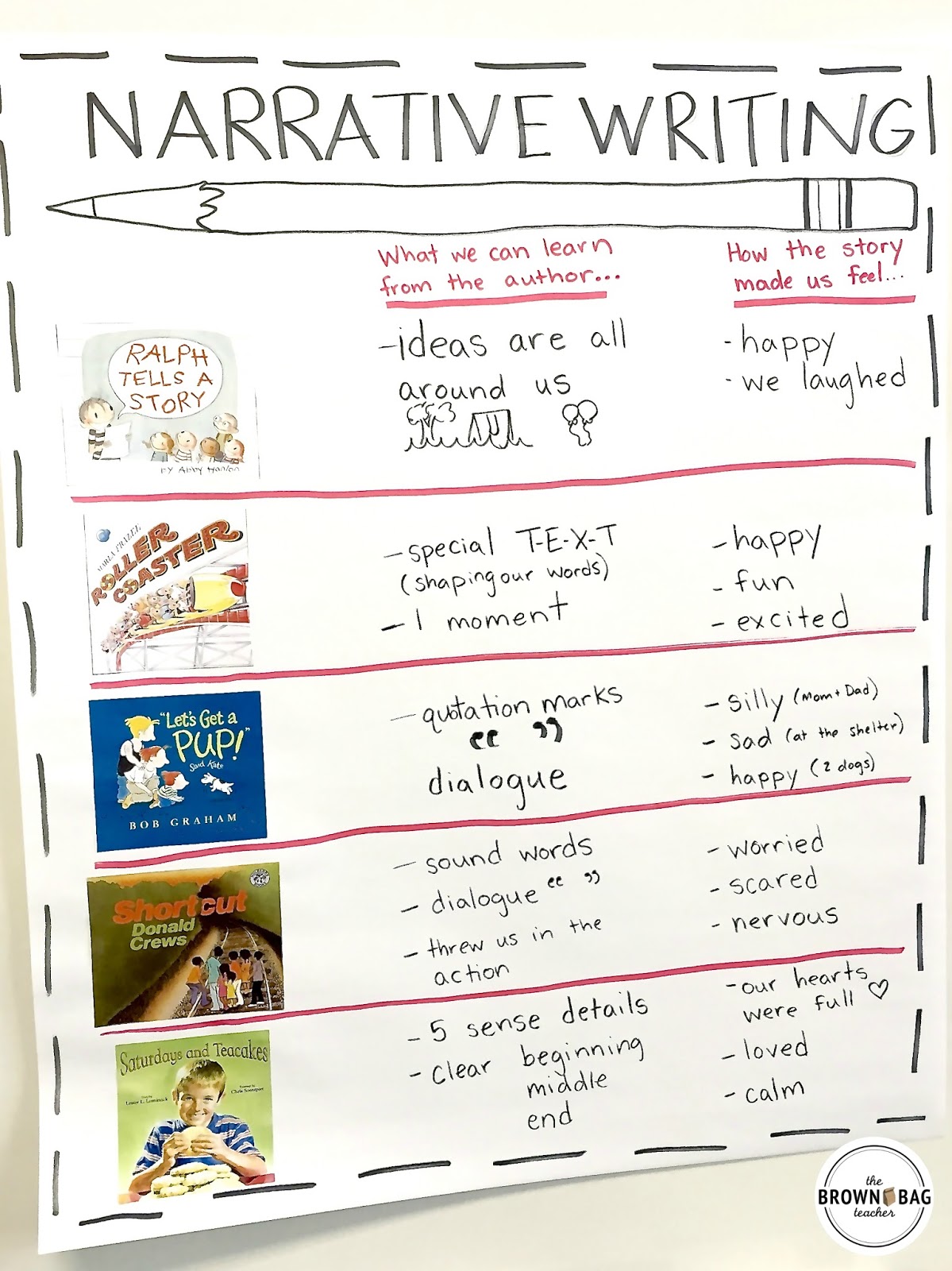

https://2.bp.blogspot.com/-U8qAdcHrzKU/Vljh4tMLDHI/AAAAAAAAJfI/aiLNPqtYvUc/s1600/Narrative%2BWriting%2BAnchor%2BChart.jpg

Feb 9, 2025 · DeepSeek not only focuses on the accuracy of information but also pays attention to the individual needs of users. It recommends relevant content to users based on their search ...

Pre-crafted templates offer a time-saving way to create a wide range of documents and files. These pre-designed formats and layouts can be used for various personal and professional tasks, including resumes, invitations, flyers, newsletters, reports, presentations, and more, streamlining the content creation process.

Narrative Writing Prompts Grade 4

4th Grade Narrative Writing Prompts Ubicaciondepersonas cdmx gob mx

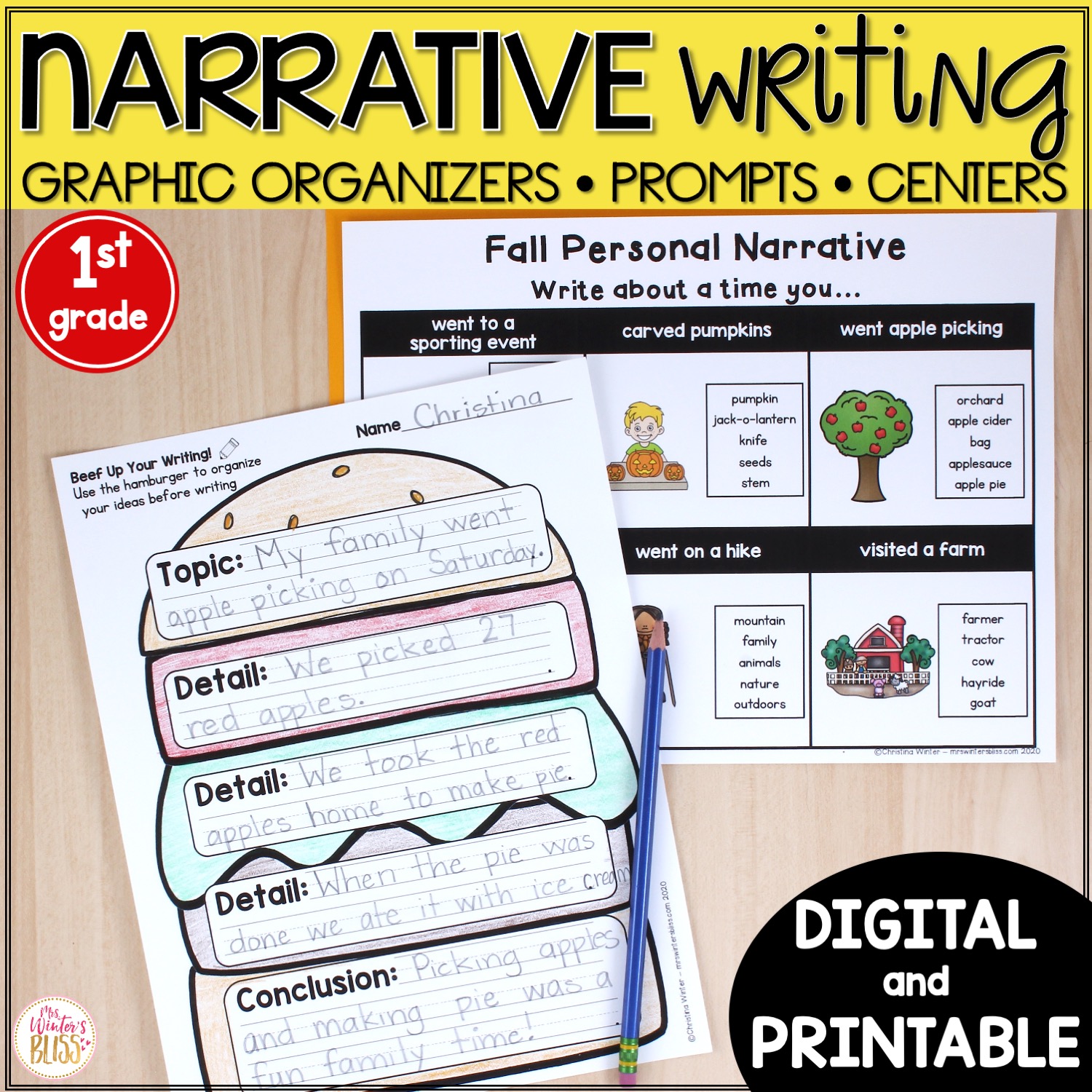

First Grade Narrative Writing Prompts Terrific Teaching Tactics

2nd Grade Narrative Writing Prompts SMI png

Narrative Writing Prompts 1st Grade Ubicaciondepersonas cdmx gob mx

Picture Writing Prompts For The Elementary Classroom Hojo's Teaching

Narrative Prompts Teacher Resources And Classroom Games Teach This

https://www.linkedin.com › pulse › whats-deepseek-buzz...

Jan 30, 2025 · If you haven't been paying attention, now is the time, because DeepSeek's latest model, R1, is creating a frenzy in AI investment and geopolitics. It's fast, it's efficient, it's open ...

https://intoai.pub › multi-head-latent-attention-is-the

Attention is a mechanism that allows a model to focus on different parts of the input sequence when making predictions. The mechanism weighs the importance of each token in the ...
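To make the idea of weighing tokens concrete, here is a minimal sketch of standard scaled dot-product attention for a single query, written in plain Python. It is an illustration only: the function names `softmax` and `attention` and the toy vectors are assumptions made for this example, and it shows the generic mechanism, not DeepSeek's Multi-head Latent Attention.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector (illustrative helper).

    query:  list of floats of length d
    keys:   one key vector of length d per input token
    values: one value vector per input token
    Returns (weights, output): one weight per token, plus the weighted
    average of the value vectors.
    """
    d = len(query)
    # Similarity of the query to each token's key, scaled by sqrt(d).
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
        for key in keys
    ]
    weights = softmax(scores)
    dim = len(values[0])
    output = [
        sum(w * value[i] for w, value in zip(weights, values))
        for i in range(dim)
    ]
    return weights, output

# Toy input: three tokens with 2-dimensional keys and values (made-up numbers).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
query = [1.0, 0.0]

weights, output = attention(query, keys, values)
print(weights)
print(output)
```

In this toy input the first and third keys match the query equally well, so those two tokens receive equal and larger weights than the second token, and the output leans toward their value vectors.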

https://www.threads.net › @googlesuperbug › post

Jul 9, 2023 · Save yourself. The world's biggest cyber threat. The dangerous Google super bug that hurts you. Follow, like to inform. In a friendly and confidential way I announced the ...

https://www.instagram.com › googlesuperbug › reels

1,318 Followers, 3,962 Following, 22 Posts. Grok & DeepSeek Nouri Google Microsoft Super Bug finder (googlesuperbug) on Instagram: "NoToCensorship DeepSeek The Best Ideator ...

https://towardsdatascience.com

Jan 31, 2025 · Training of DeepSeek-V3, including pre-training, finetuning, and RL alignment phases. This article mainly focuses on Multi-head Latent Attention, which was first proposed in ...
